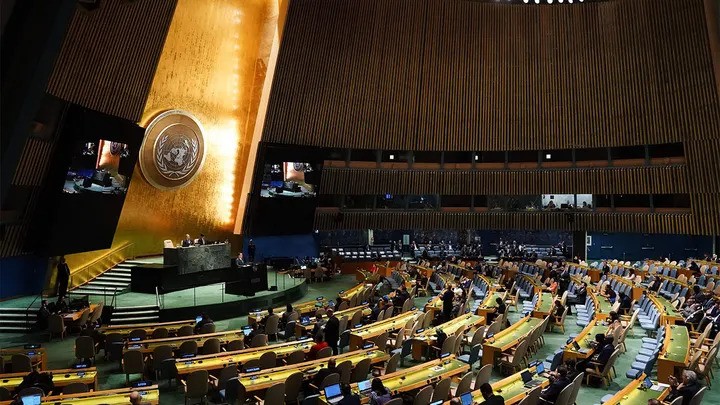

The United Nations’ recent recommendations on AI safety are sparking debate over potential regulatory overreach. Last week, the UN’s High-level Advisory Body on Artificial Intelligence unveiled seven proposals for addressing AI-related risks worldwide. However, some experts argue that these proposals lack specificity and may overlook important regional considerations.

“They didn’t really say much about the unique role of AI in different parts of the world,” says Phil Siegel, co-founder of the Center for Advanced Preparedness and Threat Response Simulation (CAPTRS). “I think they needed to be a little more aware that different economic structures and different regulatory structures that already exist are going to cause different outcomes.”

Siegel also questions whether a universal approach to AI regulation is practical, given the distinct needs of various regions. “They could have done a better job of…being a little more specific around what does a state like the United States, what is unique there? How does what we do in the United States impact others, and what should we be looking at specifically for us?”

A Call for Tailored Solutions

Siegel highlights the differences in regulatory environments, particularly between the U.S. and Europe. “Same thing with Europe. They have much more strict privacy needs or rules in Europe,” he notes. “What does that mean? I think it would have gained them a little bit of credibility to be a little more specific around the differences that our environments around the world cause for AI.”

On September 19, the Advisory Body published its AI governance proposals, which include establishing an International Scientific Panel on AI, a global AI capacity development network, and an AI office within the UN Secretariat. While Siegel acknowledges these measures as steps toward global governance, he remains skeptical of their feasibility and reads them largely as an endorsement of rules member states have already developed. “If you want to take it at face value, I think what they’re doing is saying some of these recommendations that different member states have come up with have been good, especially in the European Union, since they match a lot of those.”

The Risk of Overreach

While some see the UN as a natural forum for AI coordination, others worry about the risks of overreach.

“They probably should be coordinated through the U.N., but not with rules and kind of hard and fast things that the member states have to do, but a way of implementing best practices,” Siegel suggests.

Siegel also questions the UN’s motives, pointing to what he sees as its past attempts to extend its influence in other domains. “I think there’s a little bit of a trust issue with the United Nations given they have tried to, as I said, gain a little bit more than a seat at the table in some other areas and gotten slapped back.”

Finding the Right Forum

Given the significant progress on AI safety already made in the U.S., Europe, and Asia, Siegel questions whether the UN is the best forum for this role. He suggests the UN take a supportive rather than authoritative approach, saying, “I just don’t know if the U.N. is the right place to convene to make that happen, or is it better for them to wait for these things to happen and say, ‘We’re going to help track and be there to help’ rather than trying to make them happen.”