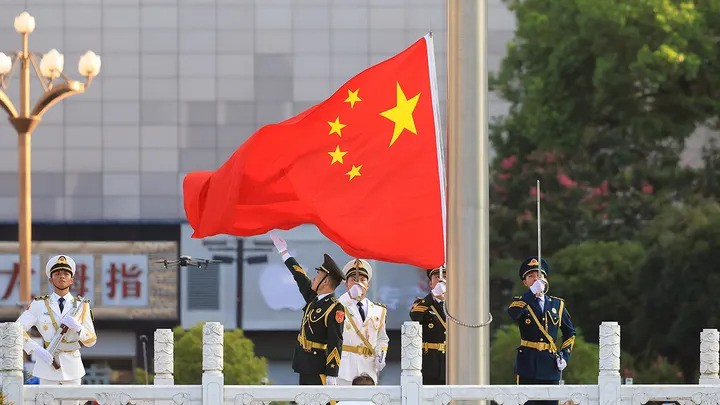

In a surprising move, China declined to sign an international “blueprint” aimed at establishing guidelines for the military use of artificial intelligence (AI). The agreement, backed by around 60 nations including the United States, seeks to set guardrails in the rapidly evolving field of AI weaponry.

Over 90 countries attended the Responsible Artificial Intelligence in the Military Domain (REAIM) summit in South Korea this week, but nearly a third did not support the nonbinding proposal.

Why China Opted Out

AI expert Arthur Herman, senior fellow and director of the Quantum Alliance Initiative at the Hudson Institute, told Fox News Digital that China’s reluctance is likely due to its general opposition to multilateral agreements it doesn’t orchestrate.

“What it boils down to … is China is always wary of any kind of international agreement in which it has not been the architect or involved in creating and organizing how that agreement is going to be shaped and implemented,” Herman said.

“I think the Chinese see all of these efforts, all of these multilateral endeavors, as ways in which to try and constrain and limit China’s ability to use AI to enhance its military edge.”

The Importance of Human Control in Military AI

The summit and the blueprint endorsed by dozens of nations aim to put safeguards around the rapidly expanding use of AI by ensuring that humans always retain control over these systems, especially in military and defense contexts. “The algorithms that drive defense systems and weapons systems depend a lot on how fast they can go,” Herman said. “[They] move quickly to gather information and data that you then can speed back to command and control so they can then make the decision.”

“The speed with which AI moves … that’s hugely important on the battlefield,” he added, before stressing the critical need for human oversight. “If the decision that the AI-driven system is making involves taking a human life, then you want it to be one in which it’s a human being that makes the final call about a decision of that sort.”

Potential Implications for Global Security

Nations leading in AI development, like the U.S., stress the importance of keeping a human element in battlefield decisions to avoid unintended casualties and prevent machine-driven conflicts. The summit, co-hosted by the Netherlands, Singapore, Kenya, and the United Kingdom, was the second such gathering, following last year’s meeting in The Hague. China’s refusal to sign on to the blueprint has raised concerns.

It remains unclear why China and the other holdouts, roughly 30 countries in all, chose not to endorse the blueprint’s proposed AI safeguards. The decision is particularly puzzling since Beijing supported a similar “call to action” during last year’s summit.

China’s Stance and Future Actions

When questioned during a Wednesday press conference, Chinese Foreign Ministry spokesperson Mao Ning stated that China had sent a delegation to the summit, where it “elaborated on China’s principles of AI governance.” Mao pointed to the “Global Initiative for AI Governance” proposed by President Xi Jinping in October, saying it “gives a systemic view on China’s governance propositions.” However, she did not explain why China withheld its support for the nonbinding blueprint introduced this week, adding only that “China will remain open and constructive in working with other parties and deliver more tangibly for humanity through AI development.”

Herman warned that while nations like the U.S. and its allies seek to establish multilateral agreements for safeguarding AI in military use, such efforts might not deter adversarial countries from developing potentially harmful technologies.

“When you’re talking about nuclear proliferation or missile technology, the best restraint is deterrence,” he explained.

“You force those who are determined to push ahead with the use of AI (even to the point of basically using AI as kind of [an] automatic kill mechanism, because they see it in their interest to do so), the way in which you constrain them is by making it clear: if you develop weapons like that, we can use them against you in the same way.”

He concluded, “You don’t count on their sense of altruism or high ethical standards to restrain them. That’s not how that works.”